Notes for CSCI 184.03

Lecture notes by Brian Guadalupe

This is the online version of the PDF lecture notes I created for this course. These have been converted from the original LaTeX source files to Markdown using Pandoc, and transformed into an online book using mdbook. Some parts have been modified to add interactivity, e.g., using SageMathCell for Sage code.

These online notes still have rough edges. If you see any typos or issues, please let me know!

Contents

-

Introduction

-

Math Background

-

Symmetric-Key Cryptography

-

Public-Key Cryptography

References

These notes are, for the most part, based on these references:

- Jean-Philippe Aumasson. Serious Cryptography: A Practical Introduction to Modern Encryption. No Starch Press, 2017.

- Jonathan Katz and Yehuda Lindell. Introduction to Modern Cryptography. CRC Press, 3rd edition, 2021.

- David Wong. Real-World Cryptography. Manning Publications, 2021.

Introduction

Relevant readings: Katz and Lindell 1.1–1.4

What is cryptography?

A dictionary definition for cryptography would say something like “the art of writing and solving codes.” Historically, cryptography focused exclusively on ensuring private communication between two parties sharing secret information in advance using codes. It was seen as an art, in the sense that it required a lot of creativity to come up with good codes or break existing ones. This is what we refer to as classical cryptography.

Modern cryptography, on the other hand, has grown to be much more than merely what the dictionary definition portrays it to be. In addition to encryption, security mechanisms such as for integrity, key exchange, and authentication now become of interest. It is now seen as more of a science than an art, due to a “rich theory” emerged in the 1970s that allowed the rigorous study of cryptography as its own discipline. In summary:

-

Classical cryptography concerns about hidden writing, while

-

Modern cryptography concerns about provable security.

Setting the scene

Much of classical cryptography is concerned with designing codes, which we call today as cryptosystems or encryption schemes. Classical cryptosystems rely on the security of the key that is shared by both parties in advance, in which case we call this as the private-key setting.

To make this scenario more concrete, we will introduce some people. Let’s say Alice wants to send a message \(m\) privately to Bob. We call \(m\) the plaintext. Of course Alice won’t send the actual \(m\), but she will transform the plaintext into a value \(c\) (called the ciphertext), and send that \(c\) to Bob. This process is called encryption, and it is usually done by running an encryption algorithm called . Upon receiving \(c\) from Alice, Bob then runs the decryption algorithm to recover the original plaintext \(m\).

Additionally, we assume that an eavesdropper named Eve can observe the ciphertext \(c\), so the goal is for the ciphertext to be meaningless to everyone else except for Bob.

Using generic descriptions for parties such as “first person,” “receiver,” or “eavesdropper,” gets cumbersome really quick, so we use names such as Alice and Bob (and many others) to explain cryptographic mechanisms more clearly. In fact, they were introduced with the publication of the original paper describing the RSA cryptosystem in the 1980s, so these names became pretty much standardized across all cryptographic literature.

The encryption syntax

A private-key encryption scheme \(\Pi\) is defined by specifying a message space \(\mathcal{M}\) along with three algorithms: a procedure for generating keys (\(\GEN{}\)), a procedure for encrypting (\(\ENC{}\)), and a procedure for decrypting (\(\DEC{}\)). These algorithms are more formally defined as follows:

-

The key-generation algorithm \(\GEN{}\) is a randomized algorithm that outputs a key \(k\) chosen according to some distribution.

-

The encryption algorithm \(\ENC{}\) takes as input a key \(k\) and a message \(m\) and outputs a ciphertext \(c\). We denote the encryption of the plaintext \(m\) using the key \(k\) by \(\Enc{k}{m}\).

-

The decryption algorithm \(\DEC{}\) takes as input a key \(k\) and a ciphertext \(c\) and outputs a plaintext \(m\). We denote the decryption of the ciphertext \(c\) using the key \(k\) by \(\Dec{k}{c}\).

We call the set of all possible keys that can be produced by the key-generation algorithm as the key space, denoted by \(\mathcal{K}\). We can assume that uniformly chooses a key from the key space.

For an encryption scheme to work properly, encrypting a message and then decrypting the resulting ciphertext using the same key should yield the original message, so: $$\Dec{k}{\Enc{k}{m}} = m$$ for every key \(k \in \mathcal{K}\) and every message \(m \in \mathcal{M}\).

Kerckhoffs’ principle

If Eve gets a hold of the decryption algorithm \(\DEC{}\) and the key \(k\), then it’s game over. Thus it makes sense to ensure that the key is being shared securely and completely hidden from everyone else. While we’re at it, why not make \(\DEC{}\) (and maybe \(\ENC{}\)) also completely hidden?

This was the way cryptography was being done in the past 2000 years or so, but it turns out that it would be a very bad idea! In the 19th century, Auguste Kerckhoffs laid down some design principles for military ciphers, but one in particular is still very relevant today, which is now known as Kerckhoffs’ principle:

The cipher method must not be required to be secret, and it must be able to fall into the hands of the enemy without inconvenience.

In other words, the security of a cryptosystem must not rely on the secrecy of its underlying algorithms; rather, the security should only rely on the secrecy of the key. That way only the key needs to be changed, and not the encryption or decryption algorithms (which are way more difficult to replace), as it is easier to change the key than to change the algorithm.

This principle is in stark contrast to the security by obscurity principle, where the security of the system is entirely dependent on the secrecy of the algorithms, and was pretty much the norm before modern cryptography. Fortunately, this principle fell out of favor when cryptographers started advocating for a more transparent approach in the cryptographic design process, so all those “proprietary” and “home-brewed” algorithms were made obsolete by their more open and “field-tested” counterparts. This is why cryptographers (the people who build) usually use the help of cryptanalysts (the people who break) in order to analyze the security of a construction. (Although cryptographers are often cryptanalysts themselves and vice versa.)

What is not cryptography?

Cryptography does not hide the fact that a secret message is being sent, and so it does not make the effort of hiding the entire communication channel. Doing so is called steganography.

Cryptography is not about making text or data “human-unreadable.” People use many techniques to try to “hide” information, namely by using encoding and decoding methods like Base64, hexadecimal, or binary strings.

| Plaintext: | this is not encrypted |

| Base64-encoded: | dGhpcyBpcyBub3QgZW5jcnlwdGVkCg== |

| Hex-encoded: | 74686973206973206e6f7420656e637279707465640a |

| Binary-encoded | 01110100 01101000 01101001 01110011 00100000 01101001 |

01110011 00100000 01101110 01101111 01110100 00100000 | |

01100101 01101110 01100011 01110010 01111001 01110000 | |

01110100 01100101 01100100 00001010 |

Such encoding methods are useful for representing binary data into a format that only supports a limited set of characters, and since it does not involve secret information (like a key), then it adds nothing in terms of security. These might look very different to humans, but to a computer, these are all the same. In short, writing something in Base64/hex/binary is not a security measure!

A word of warning (adapted from (Wong 2021))

The art of cryptography is difficult to master. It would be unwise to assume that you can build complex cryptographic protocols once you’re done with this course. This journey should enlighten you, show you what is possible, and show you how things work, but it will not make you a master of cryptography.

Do not go alone on a real adventure. Dragons can kill, and you need some support to accompany you in order to defeat them. In other words, cryptography is complicated, and this course does not permit you to abuse what you learn. To build complex systems, experts who have studied their trade for years are required. Instead, what you will learn is to recognize when cryptography should be used, or, if something seems fishy, what cryptographic primitives and protocols are available to solve the issues you’re facing, and how all these cryptographic algorithms work under the surface.

Principles of modern cryptography

Modern cryptography puts great emphasis on definitions, assumptions, and proofs.

Security definitions

Formal definitions of security are essential in the entire cryptographic design process. To put it simply,

If you don’t understand what you want to achieve, how can you possibly know when (or if) you have achieved it?

Formal definitions provide such understanding by giving a clear description of what threats are in scope and what security guarantees are desired.

In general, a security definition consists of:

-

a security guarantee that defines what the scheme is intended to prevent the attacker from doing, and

-

a threat model that describes the assumptions about the attacker’s (real-world) abilities.

Let’s consider what would a security definition look like for encryption.

Attempt 1: “It should be impossible for an attacker to recover the key.”

Suppose we have an encryption scheme where \(\Enc{k}{m} = m\). You can easily see that the resulting ciphertext does not leak the key in any way, and yet this scheme is blatantly insecure because the message is sent in the clear!

Thus we conclude that the inability to recover the key is necessary but not sufficient for security.

Attempt 2: “It should be impossible for an attacker to recover the plaintext from the ciphertext.”

A bit better, but a definition like this would also consider an encryption scheme that leaks 90% of the plaintext in the ciphertext to be secure. For real-life applications, especially those dealing with personal information, this encryption scheme would be problematic.

Attempt 3: “It should be impossible for an attacker to recover any character of the plaintext from the ciphertext.”

Close, but not good enough. This definition is “too weak” because it considers an encryption scheme secure if, for example, it reveals whether or not a person lives in a certain ZIP code area even if the full address is kept secret, which clearly we don’t want.

On the other hand, this definition is “too strong” because we cannot

rule out the chance that the attacker correctly guesses (through

sheer luck or prior information) that most letters always begin with

“Dear …” or that the last digit of your phone number is a 1. That

alone should not render an encryption scheme insecure, so the

definition should rule out those instances as successful attacks.

Attempt 4: “Regardless of any information an attacker already has, a ciphertext should leak no additional information about the underlying plaintext.”

This is the “right” approach as it addresses out previous concerns. All that’s missing is a precise mathematical formulation of this definition, which we’ll do later.

In the context of encryption, the different threat models are (sorted by increasing power of the attacker):

-

Ciphertext-only attack (COA)

The adversary observes one or more ciphertexts and attempts to determine information about the underlying plaintext(s).

-

Known-plaintext attack (KPA)

The adversary gets a hold of one or more plaintext-ciphertext pairs generated using some key, and then tries to deduce information about the underlying plaintext of some other ciphertext produced using the same key.

All historical ciphers are trivially broken by a known-plaintext attack (a notable example would be how the Enigma machine was broken).

-

Chosen-plaintext attack (CPA)

The adversary gets a hold of one or more plaintext-ciphertext pairs generated for plaintexts of their choice.

-

Chosen-ciphertext attack (CCA)

The adversary is able to obtain (at the very least, some information about) the decryption of ciphertexts of their choice, and then tries to deduce information about the underlying plaintext of some other ciphertext (whose decryption they cannot get a hold directly) produced using the same key.

Take note that none of them is better than the other, and the right one to use depends on the environment in which an encryption scheme is deployed and which captures the attacker’s true abilities.

Precise assumptions

Modern cryptography is like a “house of cards,” where proofs of security heavily rely on assumptions, most of them are unproven assumptions. This is because most modern cryptographic constructions cannot be proven secure unconditionally because proving them would entail proving one of many unsolved problems in the field of computational complexity theory (notably the \(\P\) vs. \(\NP\) problem). As such, modern cryptography requires any such assumptions to be made explicit and mathematically precise.

Proofs of security

Proofs of security give an iron-clad guarantee that no attacker will succeed, relative to the definition and assumptions. From experience, intuition is often misleading in cryptography, and can be outright disastrous. What we initially believe to be an “intuitively secure” scheme may actually be badly broken. For example, the Vigenère cipher was considered unbreakable for many centuries, until it was entirely broken in the 19th century.

References

- Wong, David. 2021. Real-World Cryptography. Manning Publications.

Perfect secrecy

Relevant readings: Katz and Lindell 2.1–2.3

The notion of perfect secrecy

We will now attempt to formalize our definition that we formulated in the previous lecture: “Regardless of information an attacker already has, a ciphertext should leak no additional information about the underlying plaintext.”

We first need to tweak our syntax of encryption defined from before by explicitly allowing the encryption algorithm to be randomized (meaning \(\Enc{k}{m}\) might output a different ciphertext when run multiple times), and we write \(c \mathrel{\hspace{1pt}\leftarrow\hspace{1pt}}\Enc{k}{m}\) to denote the possibly probabilistic process of encrypting \(m\) with key \(k\) to get \(c\). On the other hand, if is deterministic, we may write it as \(c :=\Enc{k}{m}\).

Under these changes, the invariant for correctness is now: $$\Pr\left[ \Dec{k}{\Enc{k}{m}} = m \right] = 1$$ for every key \(k \in \mathcal{K}\) and every message \(m \in \mathcal{M}\). This implies that we may assume \(\DEC\) is deterministic since it must give the same output every time it is run, thus we write \(m :=\Dec{k}{c}\) to denote the deterministic process of decrypting \(c\) with key \(k\) to get \(m\).

Let \(K\) be the random variable denoting the value of the key output by . We also let \(M\) be the random variable denoting the message being encrypted, so \(\Pr[M = m]\) denotes the probability that the message takes on the value \(m \in \mathcal{M}\).

Let’s say the enemy knows that Caesar likes to fight in the rain and it

is raining today, and the message will be either be stand by or

attack now. In fact, the enemy also knows that with probability

\(0.7\) the message will be a command to attack and with probability

\(0.3\) the message will be a command to stand by, so we have

$$\Pr[M = \texttt{attack now}] = 0.7 \text{ and } \Pr[M = \texttt{stand by}] = 0.3.$$

Now, suppose that Caesar sends \(c :=\Enc{k}{m}\) to his generals and the enemy has calculated the following: $$\Pr[M = \texttt{attack now} \mid C = c] = 0.8 \text{ and } \Pr[M = \texttt{stand by} \mid C = c] = 0.2.$$ Did the attacker learn anything useful?

Suppose an adversary knows the likelihood that different messages will be sent (in other words, the probability distribution of \(\mathcal{M}\)), and also the encryption scheme being used, but not the key. For a scheme to be perfectly secret, observing this ciphertext should have no effect on the adversary’s knowledge regarding the actual message that was sent. In other words, the adversary learns absolutely nothing about the plaintext that was encrypted.

[def:perf_sec] An encryption scheme \(\Pi = (\GEN{}, \ENC{}, \DEC{})\) with message space \(\mathcal{M}\) is perfectly secret if for every probability distribution over \(\mathcal{M}\), every message \(m \in \mathcal{M}\) and every ciphertext \(c \in \mathcal{C}\) for which \(\Pr[C = c] > 0\), $$\Pr[M = m \mid C = c] = \Pr[M = m].$$

Perfect indistinguishability

There is another equivalent definition of perfect secrecy, which relies on a game involving an adversary passively observing a ciphertext and then trying to guess which of two possible messages was encrypted.

Consider the following game:

-

An adversary \(\mathcal A\) first specifies two arbitrary messages \(m_{\texttt{0}}, m_{\texttt{1}} \in \mathcal M\).

-

A key \(k\) is generated using \(\GEN\).

-

One of the two messages specified by \(\mathcal A\) is chosen (each with probability \(1/2\)) and encrypted using \(k\); the resulting ciphertext is given to \(\mathcal A\).

-

\(\mathcal A\) outputs a “guess” as to which of the two messages was encrypted.

-

Then \(\mathcal A\) wins the game if it guesses correctly.

Then we say that an encryption scheme is perfectly indistinguishable if no adversary \(\mathcal A\) can succeed with probability better than \(1/2\). Note that, for any encryption scheme, \(\mathcal A\) can succeed with probability \(1/2\) by outputting a uniform guess; the requirement is simply that no attacker can do any better than that.

More formally, for an encryption scheme \(\Pi = (\GEN, \ENC, \DEC)\) we define the IND game (which is the same game described above) as follows:

-

The adversary \(\mathcal A\) outputs a pair of messages \(m_{\texttt{0}}, m_{\texttt{1}} \in \mathcal M\).

-

A key \(k\) is generated using , and a uniform bit \(b \in \Set{\texttt{0}, \texttt{1}}\) is chosen. A ciphertext \(c \mathrel{\hspace{1pt}\leftarrow\hspace{1pt}}\Enc{k}{m_b}\) is computed and given to \(\mathcal A\). We refer to \(c\) as the challenge ciphertext.

-

\(\mathcal A\) outputs a bit \(b^\prime\).

-

\(\mathcal A\) wins if \(b^\prime = b\).

[def:perf_ind] An encryption scheme \(\Pi = (\GEN, \ENC, \DEC)\) is perfectly indistinguishable if for every adversary \(\mathcal{A}\), $$\Pr\left[\text{$\mathcal{A}$ wins the IND game}\right] = \tfrac{1}{2}.$$

The following lemma states that Definition [def:perf_ind] is equivalent to Definition [def:perf_sec], but we won’t prove it here:

An encryption scheme \(\Pi\) is perfectly secret if and only if it is perfectly indistinguishable.

One-time pad

The one-time pad (OTP) is an encryption technique created by Gilbert Vernam in 1919. At the time of invention, it was not yet proven that it was perfectly secret until about 25 years later when Claude Shannon introduced the definition of perfect secrecy and demonstrated that the one-time pad satisfied that definition.

Let \(a \oplus b\) be the bitwise exclusive-or (XOR) of two binary strings \(a\) and \(b\) of equal length, so if \(a = a_1\cdots a_n\) and \(b_1 \cdots b_n\) are both \(n\)-bit strings, then \(a \oplus b\) is another \(n\)-bit string given by $$a \oplus b = \left(a_1 \oplus b_1\right)\cdots \left(a_n \oplus b_n\right).$$ Here, we use the variable \(n\) to denote the length of a secret key in an encryption scheme. We can use the following properties of the XOR operation to make our lives easier: $$\begin{align} x \oplus x &= \texttt{000}\cdots & \text{XOR'ing a string with itself results in zeroes} \\ x \oplus \texttt{000}\cdots &= x & \text{XOR'ing with zeroes has no effect} \\ x \oplus \texttt{111}\cdots &= \neg x & \text{XOR'ing with ones flips every bit} \\ x \oplus y &= y \oplus x & \text{XOR is commutative} \\ (x \oplus y) \oplus z &= x \oplus (y \oplus z) & \text{XOR is associative}\end{align}$$

The corresponding algorithms for the one-time pad are defined as follows:

-

\( \GEN \): Given \(n\), pick a random \(k \getsrandom \Set{\texttt{0}, \texttt{1}}^n\), then return \(k\).

-

\( \ENC \): Given \(m\) and \(k\), return \(k \oplus m\).

-

\( \DEC \): Given \(c\) and \(k\), return \(k \oplus c\).

Recall that \(k \mathrel{\hspace{1pt}\leftarrow\hspace{1pt}}\Set{\texttt{0}, \texttt{1}}^n\) means that a random \(n\)-bit string is chosen uniformly from the set, so each key is chosen with probability \(2^{-n}\).

Proof. Simply substitute the definitions of and and use the properties mentioned above. For all \(k, m \in \Set{\texttt{0}, \texttt{1}}^n\), we have $$\begin{align} \Dec{k}{\Enc{k}{m}} &= \Dec{k}{k \oplus m} \\ &= k \oplus (k \oplus m) \\ &= (k \oplus k) \oplus m \\ &= \texttt{0}^n \oplus m \\ &= m. \end{align}$$ ◻

Using Definition [def:perf_sec], we now try to show that the one-time pad is perfectly secret:

Proof. We first compute \(\Pr[C = c \mid M = m]\) for arbitrary \(c \in \mathcal{C}\) and \(m \in \mathcal{M}\) with \(\Pr[M = m] > 0\). For the one-time pad, we have $$\begin{align} \Pr[C = c \mid M = m] &= \Pr[K \oplus m = c \mid M = m] & \text{(by definition)} \\ &= \Pr[K = m \oplus c \mid M = m] \\ &= 2^{-n}. \end{align}$$ where the final equality holds because \(K\) is a uniform \(n\)-bit string independent of \(M\). Fix any distribution over \(\mathcal{M}\). Then for any \(c \in \mathcal{C}\), we have $$\begin{align} \Pr[C = c] &= \sum_{m \in \mathcal{M}} \Pr[C = c \mid M = m] \cdot \Pr[M = m] & \text{(law of total probability)} \\ &= 2^{-n} \cdot \sum_{m \in \mathcal{M}} \Pr[M = m] & \text{(from above result)} \\ &= 2^{-n} & \text{(second axiom of probability)} \end{align}$$ where \(\Pr[M = m] \ne 0\). Bayes’ theorem gives us: $$\begin{align} \Pr[M = m \mid C = c] &= \frac{\Pr[C = c \mid M = m] \cdot \Pr[M = m]}{\Pr[C = c]} \\ &= \frac{2^{-n} \cdot \Pr[M = m]}{2^{-n}} \\ &= \Pr[M = m]. \end{align}$$ Therefore the one-time pad is perfectly secret. ◻

Although the one-time pad is perfectly secret, it is rarely used for practical applications, one of main reasons being the key should be as long as the message. This is a problem when sending very long messages, especially when it cannot be predicted in advance how long the message will be.

As the name suggests, the one-time pad is only be secure if used once. Let’s see what happens if two messages \(m, m^\prime\) are encrypted with the same key \(k\). Then Eve upon obtaining \(c = k \oplus m\) and \(c^\prime = k \oplus m^\prime\) can compute $$c \oplus c^\prime = (k \oplus m) \oplus (k \oplus m^\prime) = m \oplus m^\prime$$ and from there, Eve can learn where the two messages differ.

Limitations of perfect secrecy

The drawbacks of the one-time pad are not due to the one-time pad itself, but are inherent limitations of perfect secrecy. It can be proven that any perfectly secret encryption scheme must have a key space that is at least as large as the message space.

Number theory basics

Relevant readings: Lehman, et al. 9.1–9.4, 9.6–9.7, 9.9–9.10

Note: We’ll take the proofs of the results here for granted in this course, but you can check the reference above for the full proofs if you’re interested.

Aged like milk…

Put simply, number theory is the study of the integers. Why? What’s with \(10\) or \(1\) or \(-4000\) that you don’t understand? For this reason many people (and even mathematicians) back then thought that number theory is a useless field, and also because it was “well-detached” from other fields of math. But the famous number theorist G. H. Hardy delights from the fact that it was useless and impractical:

[Number theorists] may be justified in rejoicing that there is one science, at any rate, and that their own, whose very remoteness from ordinary human activities should keep it gentle and clean.

And so he confidently concluded that:

Real mathematics has no effects on war. No one has yet discovered any warlike purpose to be served by the theory of numbers or relativity, and it seems unlikely that anyone will do so for many years.

To give some context, Hardy wrote his essay A Mathematician’s Apology (where these quotes came from) in 1940. A few years later, the Manhattan Project was formed, doing research and development on nuclear weapons for which breakthroughs in quantum mechanics and relativity had made them possible. About thirty years later, public-key cryptography (and modern cryptography as a whole) was born, which makes secure communication possible. If anything, there is a “warlike purpose” for secure communication, and in fact that’s critical in war. (Do you see now why the NSA hires mathematicians?) Today, cryptosystems based on large prime numbers help make the Internet secure.

As number theory underlies modern cryptography, Hardy’s claim aged spectacularly like milk, leaving him spinning in his grave…

Divisibility

We say that \(a\) divides \(b\),1 denoted by \(a \mid b\), iff there is an integer \(k\) such that $$ak = b.$$ This implies that for all \(n\), the following statements hold true:

-

\(n \mid 0\)

-

\(n \mid n\)

-

\(\pm 1 \mid n\), and

-

\(0 \mid n\) implies \(n=0\).

Facts about divisibility

We present here some basic facts about divisibility.

Let \(a, b, c\) be integers. We have:

-

If \(a \mid b\) and \(b \mid c\), then \(a \mid c\).

-

If \(a \mid b\) and \(a \mid c\), then \(a \mid sb + tc\) for all \(s\) and \(t\).

-

For all \(c \ne 0\), \(a \mid b\) if and only if \(ca \mid cb\).

Euclidean division

Recall from your grade school math that when a number does not cleanly divide another, a remainder is left over. We can state this formally as follows:

Let \(n\) and \(d\) be integers such that \(d \ne 0\). Then there exists a unique pair of integers \(q\) and \(r\), such that $$n = q \cdot d + r \quad\text{and}\quad 0 \le r < \left|d\right|.$$ Here, \(q\) is the quotient and \(r\) is the remainder.

The remainder operator

For any integer \(a\) and \(b\), the remainder when \(a\) is divided by \(b\) is denoted by \(a \bmod b\): $$a \bmod b = a - \left\lfloor a/b \right\rfloor \cdot b$$ where \(\lfloor a/b \rfloor\) is the (integer) quotient of \(a\) and \(b\).

Due to the division theorem, the remainder is guaranteed to be nonnegative regardless of the sign of \(a\) and \(b\). So \(-10 \bmod 3 = 2\), since \(-10 = (-4)\cdot 3 + 2\).

It’s quite unfortunate that different programming languages treat remainder operations inconsistently. For example, C, C++, and Java follow the sign of the first argument, so the expression would evaluate to \(-1\), while Python follows the sign of the second argument, so the expression would correctly evaluate to \(2\), but outputs \(-1\) instead. Always remember that remainders are nonnegative!

Greatest common divisors

The greatest common divisor of two integers \(a\) and \(b\), denoted by \(\gcd(a, b)\), is the largest integer that divides them both. If \(\gcd(a, b) = 1\), then we say that \(a\) and \(b\) are relatively prime or coprime.

Based from our definition, these statements are true: $$\begin{align} \gcd(n, 1) &= 1 \\ \gcd(n, n) &= \gcd(n, 0) = \left| n \right| \quad\text{for $n \ne 0$}.\end{align}$$

-

\(\gcd(69, 420) = 3\) since \(3\) is the largest integer that divides both \(69\) and \(420\).

-

\(\gcd(9, 22) = 1\) since \(1\) is the largest integer that divides both \(9\) and \(22\). As such, \(9\) and \(22\) are relatively prime.

Euclid’s algorithm

How do we find gcd’s? One way is to use an algorithm due to Euclid, which is based on the following observation.

In other words, we can then recursively define \(\gcd(a, b)\) as $$\gcd(a, b) = \begin{cases} a & \text{if $b = 0$}, \\ \gcd(b, a \bmod b) & \text{otherwise}. \end{cases}$$

We could compute the greatest common divisor of \(1147\) and \(899\) by repeatedly applying the above definition: $$\begin{align} \gcd(1147, 899) &= \gcd(899, 1147 \bmod 899) = \gcd(899, 248) \\ &= \gcd(248, 899 \bmod 248) = \gcd(248, 155) \\ &= \gcd(155, 248 \bmod 155) = \gcd(155, 93) \\ &= \gcd(93, 155 \bmod 93) = \gcd(93, 62) \\ &= \gcd(62, 93 \bmod 62) = \gcd(93, 31) \\ &= \gcd(31, 62 \bmod 31) = \gcd(31, 0) \\ &= 31. \end{align}$$

Extended Euclidean algorithm

The greatest common divisor of \(a\) and \(b\) is a linear combination of \(a\) and \(b\). That is, $$\gcd(a, b) = sa + tb$$ for some integers \(s\) and \(t\).

To find \(s\) and \(t\), we follow Euclid’s algorithm as usual, but we’ll do some extra bookkeeping. More specifically we keep track of how to write each of the remainders as a linear combination of \(a\) and \(b\).

We can compute the gcd of \(420\) and \(96\) with the “additional bookkeeping” as follows:

| \(x\) | \(y\) | \(x \bmod y\) | \(=\) | \(x - \lfloor x/y \rfloor \cdot y\) |

|---|---|---|---|---|

| \(420\) | \(96\) | \(36\) | \(=\) | \(420 - 4 \cdot 96\) |

| \(96\) | \(36\) | \(24\) | \(=\) | \(96 - 2 \cdot 36\) |

| \(=\) | \(96 - 2 \cdot (420 - 4 \cdot 96)\) | |||

| \(=\) | \(-2 \cdot 420 + 9 \cdot 96\) | |||

| \(36\) | \(24\) | \(12\) | \(=\) | \(36 - 1 \cdot 24\) |

| \(=\) | \((420 - 4 \cdot 96) - 1 \cdot (-2 \cdot 420 + 9 \cdot 96)\) | |||

| \(=\) | \(\boxed{3 \cdot 420 - 13 \cdot 96}\) | |||

| \(24\) | \(12\) | \(0\) |

Along the way, we computed the remainder \(x \bmod y\). Then in this linear combination of \(x\) and \(y\), we rewrite \(x\) and \(y\) in terms of \(420\) and \(96\) using our previous computations.

The last nonzero remainder is the gcd, which in this case is \(12\), and we can write it as a linear combination of \(420\) and \(96\): \(12 = 3 \cdot 420 - 13 \cdot 96\).

Prime numbers

A prime is a number greater than \(1\) that is divisible only by itself and \(1\). A number other than \(0\), \(1\), and \(-1\) that is not a prime is called composite.

Counting primes

The distribution of prime numbers seem almost random, but one of the great insights about primes is that their density among the integers has a precise limit.

Let \(\pi(n)\) denote the number of primes up to \(n\), so $$\pi(n) = \Len{\Set{p \in \Set{2, \ldots, n} \mid \text{$p$ is prime}}}.$$

Though the spacing between consecutive primes seem erratic, it eventually smooths out to be the same as the growth of the function \(n / \ln n\).

It’s a surprise tool that will help us later, when we discuss about algorithms for factoring integers.

Fundamental theorem of arithmetic

An important fact you should remember is that every positive integer has a unique prime factorization, meaning every positive integer can be constructed from primes in exactly one way (ignoring the order of the factors).

More concretely, a positive integer \(n\) can be written as $$n = p_1^{e_1} p_2^{e_2} \cdots p_k^{e_k}$$ where \(p_1, p_2, \ldots, p_k\) are unique primes. We will call this as the prime factorization of \(n\).

This is one of the reasons why \(1\) is not considered as a prime, otherwise unique factorization wouldn’t hold anymore for integers.

Modular arithmetic

Modular arithmetic operates like a clock, where it only works within a finite set of integers and numbers “wrap around” at a certain number.

Congruences

If \(a\) and \(b\) both have the same remainder when divided by \(n\), then we say that \(a\) and \(b\) are congruent modulo \(n\), usually written as \(a \equiv b \pmod{n}\). That is: $$a \bmod n = b \bmod n \quad\text{implies}\quad a \equiv b \pmod{n}.$$ The converse is also true, but we won’t prove it here.

We’ll also make use of the following lemma which would be helpful when we deal with arithmetic modulo \(n\):

Doing arithmetic modulo \(n\) [doing-arithmetic-modulo-n]

One of the main motivations behind doing arithmetic modulo \(n\) is

due to the limitation of computers. For example in most programming

languages, an int can only handle numbers up to \(2^{31}-1\) (I

think), thus an overflow would be inevitable if we start working with

very large numbers. One way to avoid integer overflow is to take

remainders at every step so that the entire computation only involves

number in the range, namely \(\Set{0, 1, \ldots, n-1}\).

Suppose we want to determine the tens digit of \(2^{2022} + 42069\). The sure-fire way to find would be to evaluate the whole expression, but we would risk overflowing if we were to store this as an integer data type since this has at least \(600\) digits. Instead, we can get the remainder of that whole thing when divided by \(100\).

The remainder when \(42069\) is divided by \(100\) is \(69\). It would be tempting to directly compute \(2^{2022}\) and get the remainder, but don’t. Let’s try to look for a pattern. $$\begin{array}{r|cccccccccccccc} n & 1 & 2 & 3 & 4 & 5 & 6 & 7 & 8 & 9 & 10 & 11 & 12 & 13 & 14 \\ \hline 2^n \bmod 100 & 2 & 4 & 8 & 16 & 32 & 64 & 28 & 56 & 12 & 24 & 48 & 96 & 92 & 84 \end{array}$$ $$\begin{array}{r|ccccccccccccccc} n & 15 & 16 & 17 & 18 & 19 & 20 & 21 &22 & 23 & 24 & 25 & 26 & \cdots \\ \hline 2^n \bmod 100 & 68 & 36 & 72 & 44 & 88 & 76 & 52 & 4 & 8 & 16 & 32 & 64 & \cdots \end{array}$$ We can see that after \(2^{21} \bmod 100 = 52\), it repeats back to \(4, 8, 16, \ldots\), thus we have a “cycle” of \(20\) numbers. Since \(2022 = 101 \cdot 20 + 2\), we have: $$\begin{align} &\left(2^{2022} + 42069\right) \bmod 100 \\ &= \left(2^{2022} \bmod 100 + 42069 \bmod 100\right) \bmod 100 \\ &= \left(\left(2^{101 \cdot 20 + 2}\right) \bmod 100 + 69\right) \bmod 100 \\ &= \left(\left(2^{2020} \cdot 4 \right) \bmod 100 + 69\right) \bmod 100 \\ &= \left(\left(2^{2020} \bmod 100 \cdot 4 \bmod 100\right) + 69\right) \bmod 100 \\ &= \left(\left(2^{20} \bmod 100 \cdot 4 \bmod 100\right) + 69\right) \bmod 100 \\ &= \left(\left(76 \cdot 4\right) + 69\right) \bmod 100 \\ &= 373 \bmod 100 \\ &= 73. \end{align}$$

Since \(\left(2^{2022} + 42069\right) \bmod 100 = 73\), the tens digit is \(7\).

Introducing \(\ZZ_n\)

Why does that work? Let’s introduce some new notation, namely \(+_n\) for doing an addition and then immediately taking a remainder on division by \(n\), and similarly \(\cdot_n\) for multiplication: $$\begin{align} i +_n j &= \left(i + j\right) \bmod n \\ i \cdot_n j &= \left(i j\right) \bmod n\end{align}$$

To be able to add and multiply within integers modulo \(n\), we repeatedly apply the following lemma:

$$\begin{align} \left(i + j\right) \bmod n &= (i \bmod n) +_n (j \bmod n) \\ \left(i j\right) \bmod n &= (i \bmod n) \cdot_n (j \bmod n) \end{align}$$

The set of integers \(\Set{0, 1, \ldots, n-1}\), together with the operations \(+_n\) and \(\cdot_n\) is referred to as the ring of integers modulo \(n\), denoted by \(\ZZ_n\).2 To make things tidier, we just denote the operations as \(+\) and \(\cdot\) if \(n\) is implied from context.

It turns out that all the familiar rules of arithmetic also hold in \(\ZZ_n\); in particular these are true in \(\ZZ_n\):

-

associativity of \(\cdot\) and \(+\)

\((i \cdot j) \cdot k = i \cdot (j \cdot k)\) and \((i + j) + k = i + (j + k)\)

-

identity for \(\cdot\) and \(+\)

\(1 \cdot k = k \cdot 1 = k\) and \(0 + k = k + 0 = k\)

-

inverse for \(+\)

\(k + (-k) = 0\)

-

commutativity of \(\cdot\) and \(+\)

\(i \cdot j = j \cdot i\) and \(i + j = j + i\)

-

distributivity

\(i \cdot (j + k) = (i \cdot j) + (i \cdot k)\)

Multiplicative inverses

In regular arithmetic, we use division to “undo” a multiplication, so in this sense multiplication and division are inverses of each other. The multiplicative inverse of a number (say \(x\)) is another number \(x^{-1}\) such that $$x \cdot x^{-1} = 1.$$

Over \(\QQ\), the set of rational numbers, every rational number (except \(0\)) of the form \(a/b\) has an inverse \(b/a\). On the other hand, only \(1\) and \(-1\) have inverses over the integers. Over the ring \(\ZZ_n\), it’s a bit more complicated.

\(2\) is a multiplicative inverse of \(8\) in \(\ZZ_{15}\), since $$2 \cdot 8 = 1 \quad\text{(in $\ZZ_{15}$)}.$$

But \(3\) does not have a multiplicative inverse in \(\ZZ_{15}\). By contradiction, let’s say there was an inverse \(y\) for \(3\), so $$1 = 3 \cdot y \quad\text{(in $\ZZ_{15}$)}.$$ Then multiplying both sides by \(5\) we have $$\begin{align} 5 &= 5 \cdot (3 \cdot y) \\ &= (5 \cdot 3) \cdot y \\ &= 0 \cdot y \\ &= 0 \quad\text{(in $\ZZ_{15}$)} \end{align}$$ which leads to a contradiction, thus there can’t be any such inverse \(y\).

Finding inverses

The proof to the following theorem conveniently shows us how to compute for the inverse.

If \(k \in \ZZ_n\) is relatively prime to \(n\), then \(k\) has an inverse in \(\ZZ_n\).3

Proof. If \(k\) is relatively prime to \(n\), this means \(\gcd(n, k) = 1\). We can use the extended Euclidean algorithm to express \(1\) as a linear combination of \(n\) and \(k\): $$sn + tk = 1.$$ Working over \(\ZZ_n\), the equation becomes $$(s \bmod n \cdot n \bmod n) + (t \bmod n \cdot k \bmod n) = 1 \quad\text{(in $\ZZ_n$)}.$$ But \(n \bmod n = 0\) and \(k \bmod n = k\) since \(k \in \ZZ_n\), so we get $$t \bmod n \cdot k = 1 \quad\text{(in $\ZZ_n$)}.$$ Thus \(t \bmod n\) is a multiplicative inverse of \(k\). ◻

In fact, when we’re able to find an inverse, it is the only inverse for that number; in other words, inverses are unique when they exist.

Suppose we want to find the inverse of \(18\) in \(\ZZ_{101}\). Using the extended Euclidean algorithm:

| \(x\) | \(y\) | \(x \bmod y\) | \(=\) | \(x - \lfloor x/y \rfloor \cdot y\) |

|---|---|---|---|---|

| \(101\) | \(18\) | \(11\) | \(=\) | \(101 - 5 \cdot 18\) |

| \(18\) | \(11\) | \(7\) | \(=\) | \(18 - 1 \cdot 11\) |

| \(=\) | \(18 - 1 \cdot (101 - 5 \cdot 18)\) | |||

| \(=\) | \(- 1 \cdot 101 + 6 \cdot 18\) | |||

| \(11\) | \(7\) | \(4\) | \(=\) | \(11 - 1 \cdot 7\) |

| \(=\) | \((101 - 5 \cdot 18) - 1 \cdot (- 1 \cdot 101 + 6 \cdot 18)\) | |||

| \(=\) | \(2 \cdot 101 - 11 \cdot 18\) | |||

| \(7\) | \(4\) | \(3\) | \(=\) | \(7 - 1 \cdot 4\) |

| \(=\) | \((- 1 \cdot 101 + 6 \cdot 18) - 1 \cdot (2 \cdot 101 - 11 \cdot 18)\) | |||

| \(=\) | \(-3 \cdot 101 + 17 \cdot 18\) | |||

| \(4\) | \(3\) | \(1\) | \(=\) | \(4 - 1 \cdot 3\) |

| \(=\) | \((2 \cdot 101 - 11 \cdot 18) - 1 \cdot (-3 \cdot 101 + 17 \cdot 18)\) | |||

| \(=\) | \(\boxed{5 \cdot 101 - 28 \cdot 18}\) | |||

| \(3\) | \(1\) | \(0\) |

Since \(1 = 5 \cdot 101 - 28 \cdot 18\), the inverse of \(18\) in \(\ZZ_{101}\) is \(-28 \bmod 101 = 73\).

Chinese remainder theorem

Suppose I have a number that leaves a remainder of \(1\) when divided by \(3\), \(4\) when divided by \(5\), and \(6\) when divided by \(7\). What is my number?

An easy but naïve solution is to make a table of values of \(n \bmod 3\), \(n \bmod 5\), and \(n \bmod 7\) for each \(n = 0, \ldots, 104\). Then we try to find the value of \(n\) such that \(n \bmod 3 = 1\), \(n \bmod 5 = 4\), and \(n \bmod 7 = 6\). $$\begin{array}{lccccccccccccccc} n & 0 & 1 & 2 & 3 & 4 & 5 & 6 & 7 & 8 & 9 & 10 & \cdots & 33 & \mathbf{34} & \cdots \\ \hline n \bmod 3 & 0 & 1 & 2 & 0 & 1 & 2 & 0 & 1 & 2 & 0 & 1 & \cdots & 0 & \mathbf{1} & \cdots \\ n \bmod 5 & 0 & 1 & 2 & 3 & 4 & 0 & 1 & 2 & 3 & 4 & 0 & \cdots & 3 & \mathbf{4} & \cdots \\ n \bmod 7 & 0 & 1 & 2 & 3 & 4 & 5 & 6 & 0 & 1 & 2 & 3 & \cdots & 5 & \mathbf{6} & \cdots \end{array}$$ Eventually we will find out that \(n = 34\) satisfies the above conditions. Thus \(34\) is my number.

However, this brute-force approach wouldn’t scale well when the moduli get very large. Here’s a better way:

Let \(m_1, \ldots, m_k\) be pairwise relatively prime, meaning for each pair of distinct \(i, j\) we have \(\gcd\left(m_i, m_j\right) = 1\). Then the system of congurences $$\begin{align} x &\equiv a_1 \pmod{m_1} \\ \phantom{x} &\vdots \\ x &\equiv a_k \pmod{m_k} \end{align}$$ has a solution for \(x\) modulo \(M = m_1 \cdots m_k\).

Proof sketch. For all \(i\), let \(y_i = M/m_i\) be the product of all the moduli except \(m_i\), and \(y_i^\prime\) be the inverse of \(y_i\) modulo \(m_i\). Then $$x = \sum_{i=1}^k a_i y_i y_i^\prime \quad\text{(in $\ZZ_M$)}$$ is the unique solution. ◻

Let’s use the same “guess my number” problem above, but this time we’ll use the Chinese remainder theorem. Essentially, the problem boils down to finding a solution to the system of congruences: $$\begin{align} x &\equiv 1 \pmod{3} \\ x &\equiv 4 \pmod{5} \\ x &\equiv 6 \pmod{7} \end{align}$$

To find the unique solution \(x\), we need to calculate for \(y_i\) and \(y_i^\prime\), which are $$\begin{align} y_1 &= 5 \cdot 7 = 35, &y_1^\prime &= 35^{-1} = 2 \quad\text{(in $\ZZ_3$)}, \\ y_2 &= 3 \cdot 7 = 21, &y_2^\prime &= 21^{-1} = 1 \quad\text{(in $\ZZ_5$)}, \\ y_3 &= 3 \cdot 5 = 15, &y_3^\prime &= 15^{-1} = 1 \quad\text{(in $\ZZ_7$)}. \end{align}$$ We now have $$\begin{align} x &= a_1 y_1 y_1^\prime + a_2 y_2 y_2^\prime + a_3 y_3 y_3^\prime \\ &= (1 \cdot 35 \cdot 2) + (4 \cdot 21 \cdot 1) + (6 \cdot 15 \cdot 1) \\ &= 70 + 84 + 90 \\ &= 244 = 34 \quad\text{(in $\ZZ_{105}$)}. \end{align}$$ Thus we also get the same result as the brute-force method.

Euler’s theorem

In this section, we will explore some shortcuts to compute remainders of numbers raised to very large powers. These will come in handy for public-key cryptosystems like RSA.

For \(n > 0\), define $$\phi(n) = \text{the number of integers in $\Set{0, \ldots, n-1}$ that are relatively prime to $n$}.$$

For example, \(\phi(11) = 10\) because all \(10\) positive numbers in \(\Set{0, \ldots, 10}\) are relatively prime to \(11\) except for \(0\), while \(\phi(12) = 4\) because only \(1\), \(5\), \(7\), and \(11\) are the only numbers in \(\Set{0, 1, \ldots, 11}\) that are relatively prime to \(12\). If \(p\) is prime, then \(\phi(p) = p-1\) since every positive number in \(\Set{0, \ldots, p-1}\) is relatively prime to \(p\).

If \(n\) and \(k\) are relatively prime, then $$k^{\phi(n)} \equiv 1 \pmod{n}.$$

If \(n\) happens to be a prime and since \(\phi(p) = p-1\) for prime \(p\), then we arrive at a special case called Fermat’s little theorem:

Suppose \(p\) is a prime and \(k\) is not a multiple of \(p\). Then $$k^{p-1} \equiv 1 \pmod{n}.$$

These theorems provide another way of computing inverses. Working in \(\ZZ_n\), we restate Euler’s theorem as:

If \(n\) and \(k\) are relatively prime, then $$k^{\phi(n)} = 1 \quad\text{(in $\ZZ_n$)}.$$

Dividing both sides by \(k\) (i.e., multiplying both sides by \(k^{-1}\)), we get $$k^{-1} = k^{\phi(n)} \cdot k^{-1} = k^{\phi(n)-1} \quad\text{(in $\ZZ_n$)}.$$ When \(n\) is prime, then it’s even more straightforward, as we only have $$k^{-1} = k^{(n-1)-1} = k^{n-2} \quad\text{(in $\ZZ_n$)}.$$

As before, suppose we want to find the inverse of \(18\) in \(\ZZ_{101}\). Since \(101\) is prime, we can just use the simpler formula: $$\begin{align} 18^{-1} &= 18^{101-2} \\ &= 18^{99} \\ &= 73 \quad\text{(in $\ZZ_{101}$)}. \end{align}$$

Computing Euler’s \(\phi\) function

RSA deals with arithmetic modulo the product of two large primes.4 So the following lemma shows how to compute \(\phi(pq)\) where \(p\) and \(q\) are distinct primes:

Now for the main result: the following theorem shows how to compute \(\phi(n)\) for any \(n\).

-

If \(p\) is a prime, then $$\phi\left(p^k\right) = p^k \left(1 - \frac{1}{p}\right) = p^k - p^{k-1}$$ for \(k \ge 1\).

-

If \(a\) and \(b\) are relatively prime, then \(\phi(ab) = \phi(a) \phi(b)\).

$$\begin{align} \phi(420) &= \phi(2^2 \cdot 3 \cdot 5 \cdot 7) \\ &= \phi(2^2) \cdot \phi(3) \cdot \phi(5) \cdot \phi(7) \\ &= \left(2^2 - 2^1\right) \left(3^1 - 3^0\right) \left(5^1 - 5^0\right) \left(7^1 - 7^0\right) \\ &= 96. \end{align}$$

The consequence of the main result is that we can compute \(\phi(n)\) using the prime factorization of \(n\):

For any number \(n\), if \(p_1, p_2, \cdots, p_k\) are the (distinct) prime factors of \(n\), then $$\phi(n) = n \left(1 - \frac{1}{p_1}\right) \left(1 - \frac{1}{p_2}\right) \cdots \left(1 - \frac{1}{p_k}\right).$$

We can also say \(a\) is a divisor of \(b\), or \(a\) is a factor of \(b\), or \(b\) is divisible by \(a\), or \(b\) is a multiple of \(a\). Take your pick.

We’ll formally define what rings are in the near future.

Finding an inverse “in \(\ZZ_n\)” is the same as finding an inverse “modulo \(n\).”

We call a number that is a product of two primes as semiprime.

Algebraic structures

Relevant readings: (Menezes, Oorschot, and Vanstone 1996) 2.5–2.6, (Hoffstein, Pipher, and Silverman 2014) 2.10

Note: We’ll take the proofs of the results here for granted in this course, but you can check the reference above for the full proofs if you’re interested.

First of all, why?

To put it simply, an algebraic structure is a set with additional features. For example, if you have a set of nonnegative integers less than \(n\) and slap it with addition and multiplication operations along with a bunch of properties, then you’ve got \(\ZZ_n\), the ring of integers modulo \(n\).

Algebraic structures are prominent in modern cryptography, where public-key cryptosystems like RSA work with rings of integers modulo a large semiprime, Diffie–Hellman key exchange works with finite cyclic groups, and symmetric-key primitives like AES and RC4 uses finite fields. Modern cryptography also relies on (yet proven) assumptions about these algebraic structures, particularly about the inefficiency of factoring integers and taking discrete logarithms.

Groups

A group \((G, \cdot)\) is a set \(G\) together with a binary operation \(\cdot\), such that these properties hold:

-

Closure: If \(x, y \in G\), then \(x \cdot y \in G\).

-

Associativity: For all \(x, y, z \in G\), \((x \cdot y) \cdot z = x \cdot (y \cdot z)\).

-

Identity: There exists an element \(e \in G\) (called the identity element), such that \(x \cdot e = e \cdot x = x\) for all \(x \in G\).

-

Inverses: For each \(x \in G\), there exists a unique element \(y \in G\) (called the inverse of \(x\)), such that \(x \cdot y = y \cdot x = e\).

If \((G, \cdot)\) additionally satisifies the commutative property, i.e., for all \(x, y \in G\) we have \(x \cdot y = y \cdot x\), then it is an abelian group.

Some might call it an abuse of notation, but we usually refer to the group \((G, \cdot)\) by its underlying set \(G\) instead, and we’ll do so for convenience.

A group \(G\) is finite if \(\Len{G}\) is finite. The number of elements in a finite group is called its order.

-

The set of integers under addition forms a group.

-

The set \(\ZZ_n\) under addition does not form a group. On the other hand, \(\ZZ_n\) under multiplication does form a group.

Subgroups and cyclic groups

If \(H\) is a non-empty subset of \(G\) and \((H, \cdot)\) is also a group, then \(H\) is a subgroup of \(G\), usually denoted by \(H \le G\). Furthermore, if \(H \le G\) and \(H \ne G\), then \(H\) is a proper subgroup of \(G\).

A group \(G\) is cyclic if there is an element \(g \in G\) such that for all \(x \in G\) there is an integer \(k\) with \(x = g^k\). Such an element \(g\) is called a generator of \(G\).

If \(G\) is a group and \(a \in G\), then the set of all powers of \(a\) forms a cyclic subgroup of \(G\), called the subgroup generated by \(a\), denoted by \(\gen{a}\).

Let \(G\) be a group and \(a \in G\). The order of \(a\) is the smallest positive integer \(k\) such that \(a^k = e\), provided that such an integer exists. If not, then the order of \(a\) is defined to be \(\infty\).

If \(G\) is a finite group and \(H\) is a subgroup of \(G\), then \(\Len{H}\) divides \(\Len{G}\). Hence, if \(a \in G\), the order of \(a\) divides \(\Len{G}\).

Powers, primitive roots, and discrete logarithms

Let \(G\) be any group. Then for any \(x \in G\) and positive integer \(k\), the expression \(x^k\) is the product of \(x\) with itself \(k\) times: $$x^k = \underbrace{x \cdot x \cdots x}_{\text{$k$ times}}.$$ If \(k = 0\), then \(x^0 = e\).

Let’s consider \(\ZZ_n^\ast\), the set of all positive integers less than \(n\) that are relatively prime to \(n\), defined as follows: $$\ZZ_n^\ast = \Set{a \in \ZZ_n \mid \gcd(a, n) = 1}.$$ The definition also implies $$\Len{\ZZ_n^\ast} = \phi(n),$$ which is the order of \(\ZZ_n^\ast\).

\(\ZZ_n^\ast\) forms a group under multiplication, so we also call \(\ZZ_n^\ast\) the multiplicative group of integers modulo \(n\).

Let \(g \in \ZZ_n^\ast\). If the order of \(g\) is \(\phi(n)\), then \(g\) is a generator or a primitive element of \(\ZZ_n^\ast\). Thus \(\ZZ_n^\ast\) is cyclic if it has a generator.

Suppose \(G\) is a finite cyclic group of order \(n\). Let \(g\) be a generator of \(G\), and let \(h \in G\). The discrete logarithm of \(h\) to the base \(g\), denoted \(\log_g h\), is the unique integer \(x\) (where \(0 \le x \le n-1\)) such that \(g^x = h\).

Consider the group \(\ZZ_{17}^\ast\). The discrete logarithm of \(13\) with respect to base \(3\) is \(4\), because $$3^4 = 81 = 13 \quad\text{in $\ZZ_{17}^\ast$}.$$ As such we denote this as \(\log_3 13 = 4\).

Notice that taking the discrete logarithm is the inverse operation of modular exponentiation, in the same way logarithms and exponentiation are inverses in the \(\RR\) world, but you should also note that the discrete logarithm bears little resemblance to the continuous logarithm defined on the real or complex numbers.

Rings

A ring \((R, +, \cdot)\) is a set \(R\) together with two binary operations \(+\) and \(\cdot\) (we’ll call them addition and multiplication, respectively), such that these properties hold:

-

\(R\) forms an abelian group under \(+\), with \(0\) as the additive identity.

-

Associativity under \(\cdot\): For all \(x, y, z \in R\), \((x \cdot y) \cdot z = x \cdot (y \cdot z)\).

-

Identity for \(\cdot\): There exists an element \(1 \in R\) (called the multiplicative identity) for which \(1 \ne 0\), such that \(x \cdot 1 = 1 \cdot x = x\) for all \(x \in R\).

-

Distributivity: For all \(x, y, z \in R\), \(x \cdot (y + z) = x \cdot y + x \cdot z\) and \((x + y) \cdot z = x \cdot z + y \cdot z\).

If \(R\) additionally satisifies the commutative property for \(\cdot\), i.e., for all \(x, y \in R\) we have \(x \cdot y = y \cdot x\), then it is a commutative ring.

We have already seen \(\ZZ\) and \(\ZZ_n\), both of which also happen to be commutative rings.

Fields

Intuitively, a field supports the notion of addition, subtraction, multiplication, and division.

A field is a commutative ring in which all nonzero elements have multiplicative inverses.

-

The set of rationals \(\QQ\) under addition and multiplication forms a field, since for every \(p/q \in \QQ\), the additive inverse is \(-p/q\) and the multiplicative inverse is \(q/p\).

-

The sets \(\RR\) under addition and multiplication form a field, since for every \(x \in \RR\), the additive inverse is \(-x\) and the multiplicative inverse is \(1/x\).

-

\(\ZZ_n\) under addition and multiplication forms a field, since for every \(x \in \ZZ_n\), the additive inverse is \(n-x\) and the multiplicative inverse is \(x^{-1}\) (which we can compute using the extended Euclidean algorithm).

Divisibility and quotient rings

The concept of divisibility, originally introduced for the integers \(\ZZ\) can be generalized to any ring.

Let \(a\) and \(b\) be elements of a ring \(R\) with \(b \ne 0\). We say that \(b\) divides \(a\), or that \(a\) is divisible by \(b\), if there is an element \(c \in R\) such that $$a = b \cdot c.$$

Recall that an integer is called a prime if it has no nontrivial factors. What is a trivial factor? We can “factor” any integer by writing it as \(a = 1 \cdot a\) and as \(a = (-1)(-a)\), so these are trivial factorizations. What makes them trivial is the fact that \(1\) and \(-1\) have multiplicative inverses.

In general, if \(R\) is a ring and if \(u \in R\) is an element that has a multiplicative inverse \(u^{-1} \in R\), then we can factor any element \(a \in R\) by writing it as \(a = u^{-1} \cdot (u a)\). Elements that have multiplicative inverses and elements that have only trivial factorizations are special elements of a ring, so we give them special names.

Let \(R\) be a ring. An element \(u \in R\) is called a unit if it has a multiplicative inverse, i.e., if there is an element \(v \in R\) such that \(u \cdot v = 1\).

An element \(a\) of a ring \(R\) is said to be irreducible if \(a\) is not itself a unit and if in every factorization of a as \(a = b \cdot c\), either \(b\) is a unit or \(c\) is a unit.

We have noted in the previous lecture notes that the integers \(\ZZ\) are uniquely factorizable (due to the fundamental theorem of arithmetic). Not every ring has this important unique factorization property though, but those that do are called unique factorization domains (UFDs).

Polynomial rings

Let \(K\) be a field (or more generally, a commutative ring). We define a polynomial in \(x\) over \(K\) as an expression of the form $$f(x) = a_n x^n + \cdots + a_2 x^2 + a_1 x + a_0$$ where each coefficient \(a_i \in K\) and \(n \ge 0\). The degree of \(f(x)\), denoted by \(\deg f(x)\) is the largest integer \(m\) such that \(a_m \ne 0\). If the leading coefficient \(a_m\) is equal to \(1\), then \(f(x)\) is monic.

For this section, we let \(K\) be any arbitrary field. The polynomial ring \(K[x]\) is the ring formed by the set of all polynomials in \(x\) where the coefficients come from \(K\). The associated operations are the usual polynomial addition and multiplication, but coefficients are computed in \(K\).

Let \(f(x) \in K[x]\) be a polynomial of degree at least \(1\). Then \(f(x)\) is said to be irreducible over \(K\) if it cannot be written as the product of two polynomials in \(K[x]\), each of positive degree.

Like the ring of integers, the division theorem also applies to polynomials (if you remember how to divide one polynomial by another back in high school, this is exactly what we do here):

If \(g(x), h(x) \in K[x]\), with \(h(x) \ne 0\), then ordinary polynomial long division of \(g(x)\) by \(h(x)\) yields unique polynomials \(q(x), r(x) \in K[x]\) such that $$g(x) = q(x) h(x) + r(x),$$ where \(\deg r(x) < \deg h(x)\). Here, \(q(x)\) is the quotient and \(r(x)\) is the remainder.

Rings of this sort that have a “division with remainder” algorithm are called Euclidean domains.

The polynomial ring \(K[x]/\gen{m(x)}\) consists of polynomials in \(K[x]\) of degree less than \(n = \deg f(x)\), where addition and multiplication are done modulo \(m(x)\).

We can now define common divisors and greatest common divisors in \(K[x]\).

A common divisor of two elements \(a, b \in K[x]\) is an element \(d \in K[x]\) that divides both \(a\) and \(b\). We say that \(d\) is a greatest common divisor of \(a\) and \(b\) if every common divisor of \(a\) and \(b\) also divides \(d\).

The greatest common divisor of \(x^2 - 1\) and \(x^3 + 1\) is \(x + 1\). Notice that $$x^2-1 = (x+1) (x-1) \quad\text{and}\quad x^3+1 = (x+1)(x^2-x+1)$$ so \(x+1\) is a common divisor. I will leave it to you to check that it is the greatest common divisor.

Finite fields

Finite fields (also called Galois fields) are fields with a finite number of elements. Any finite field has order \(p^n\) (thus contains \(p^n\) elements) for some prime \(p\) and integer \(n \ge 1\). For every prime power order \(p^n\), there is a unique finite field of order \(p^n\). We denote this field by \(\FF_{p^n}\) or sometimes \(\mathrm{GF}(p^n)\). When \(n = 1\), we get \(\ZZ_p\), the ring of integers modulo a prime.

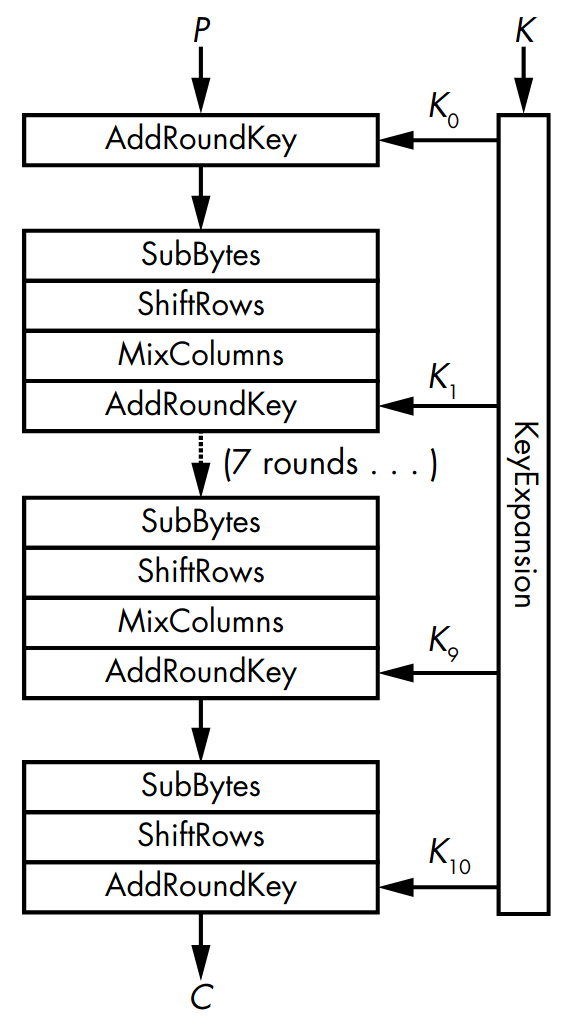

For example, AES uses the finite field \(\FF_{256}\), defined by the polynomial ring \(\ZZ_2[x]/\gen{m(x)}\) where \(m(x) = x^8 + x^4 + x^3 + x + 1\).

Finite field arithmetic

Let \(m(x)\) be a monic irreducible polynomial over \(\FF_2\). Then the finite field with \(2^n\) elements \(\FF_{2^n}\) is defined as the polynomial ring \(\FF_2[x]/\gen{m(x)}\), where \(m(x)\) is the modulus such that \(n = \deg m(x)\).

Addition in \(\FF_{2^n}\) is the usual polynomial addition where the coefficients are added over \(\FF_2\). Since \(-1 = 1 ;\text{(in $\FF_2$)}\), subtraction is exactly the same as addition. As the order of the finite field is power of \(2\), we can represent an element of \(\FF_{2^n}\) as bits (where the \(i\)th least significant bit is set if there is an “\(x^i\)” term). Then addition/subtraction is identical to the XOR operation.

In “normal arithmetic” we would have a term like \(2x^6\), but this

becomes \(0x^6\) when we reduce the coefficients modulo \(2\).

$$\begin{align}

&(x^6 + x^4 + x + 1) + (x^7 + x^6 + x^3 + x) \\

&= x^7 + x^6 + x^6 + x^4 + x^3 + x + x + 1 \\

&= x^7 + x^4 + x^3 + 1

\end{align}$$ We can represent \(x^6 + x^4 + x + 1\) and

\(x^7 + x^6 + x^3 + x\) in binary as 01010011 and 11001010

respectively. Then we have:

$$\texttt{01010011} \oplus \texttt{11001010} = \texttt{10011001}$$

which corresponds to \(x^7 + x^4 + x^3 + 1\).

Multiplication in \(\FF_{2^n}\) is done using polynomial multiplication and then dividing the product by the modulus, thus the remainder is the actual product.

Suppose we’re working over \(\FF_2[x]/\gen{x^8+x^4+x^3+x+1}\). To multiply \(x^6 + x^4 + x + 1\) and \(x^7 + x^6 + x^3 + x\): $$\begin{align} &(x^6 + x^4 + x + 1) (x^7 + x^6 + x^3 + x) \\ &= (x^{13} + x^{12} + x^9 + x^7) + (x^{11} + x^{10} + x^7 + x^5) + (x^8 + x^7 + x^4 + x^2) + (x^7 + x^6 + x^3 + x) \\ &= x^{13} + x^{12} + x^{11} + x^{10} + x^9 + x^8 + x^6 + x^5 + x^4 + x^3 + x^2 + x \end{align}$$ and then $$\begin{align} &(x^{13} + x^{12} + x^{11} + x^{10} + x^9 + x^8 + x^6 + x^5 + x^4 + x^3 + x^2 + x) \bmod (x^8+x^4+x^3+x+1) \\ &= 1. \end{align}$$

Getting the multiplicative inverse of an element in \(\FF_{2^n}\) can be done using the extended Euclidean algorithm, and division in \(\FF_{2^n}\) is defined by \(a / b = a b^{-1}\) for any \(a, b \in \FF_{2^n}\).

References

-

Hoffstein, Jeffrey, Jill Pipher, and Joseph H. Silverman. 2014. An Introduction to Mathematical Cryptography. 2nd ed. Springer-Verlag New York. http://link.springer.com/10.1007/978-0-387-77993-5.

-

Menezes, Alfred J., Paul C. van Oorschot, and Scott A. Vanstone. 1996. Handbook of Applied Cryptography. CRC Press.

Computational complexity

Relevant readings: Sipser 7.1–7.4, 10.2, 10.6, Menezes, et al. 3.1

Time complexity

If you recall your CSci 30 or MSys 30 class, the time complexity or running time of an algorithm refers to the number of steps the algorithm makes given the size of the input. More often than not, we don’t really need the exact number of steps as minor changes in the implementation can change it, so we only care about how fast it grows as the input size increases.

Asymptotic analysis

When we are dealing with input sizes that are large enough so that only the rate of growth of the running time matters, we are looking at the asymptotic efficiency of an algorithm.

Instead of finding the exact expression that describes the running time, we can simply use asymptotic notation to express the running time of algorithms. In a nutshell, asymptotic notation suppresses constant factors and lower-order terms. We don’t care about constant factors because they are too system- and implementation-dependent. We also don’t care about lower-order terms because these terms will become irrelevant as the size becomes large, and we only care about efficiency for large input sizes.

Suppose we have an algorithm whose running time is defined by \(T(n) = 6n^3 + 2n^2 + 20n + 45\). For large values of \(n\), the highest order term \(6n^3\) will overshadow the other terms. Disregarding the coefficient \(6\), we say that \(T\) is asymptotically at most \(n^3\).

In terms of big-\(O\) notation, we can also say that \(T(n) = O(n^3)\).

We say that \(f(n)\) is \(O(g(n))\) (pronounced “big-oh of \(g\) of \(n\)” or sometimes just “oh of \(g\) of \(n\)”) if and only if there exist positive constants \(c\) and \(n_0\) such that \(f(n) \le c g(n)\) for all \(n \ge n_0\).

-

Suppose we have \(3n^2+5n\).

Among the terms, \(3n^2\) is the fastest-growing. Dropping the constant factor \(3\), we have \(3n^2+5n = O(n^2)\).

-

Suppose we have \(n + 2\sqrt{n} + 10\).

Note that \(\sqrt{n}\) can be rewritten as \(n^{1/2}\), so we now have \(n + 2n^{1/2} + 10\). Since \(n\) grows faster than both \(2n^{1/2}\) and \(10\), we conclude that \(n + 2\sqrt{n} + 10 = O(n)\).

-

Suppose we have \(n \log_3 n + 4n \log_7 n + n + 1\).

When dealing with terms containing logarithms, it would be easier to manipulate if the logs have the same base. We then convert all logs to base \(2\) using the change of base formula, so we get \(\frac{1}{\log 3} \cdot n \log n + \frac{1}{\log 7} \cdot n \log n + n + 1\). Ignoring constant factors, we can see that the \(n \log n\) term grows the fastest among the terms.

Therefore \(n \log_3 n + 4n \log_7 n + n + 1 = O(n \log n)\).

(Pro-tip: Logarithms with different bases differ only by a constant factor, so as long as we are concerned with asymptotic notation, the base of the logarithm is irrelevant.)

-

Suppose we have \(2 \cdot 3^{n-2} + 6 \cdot 2^{n+1} + 12n^4\).

Ignoring constant factors, we can see that the \(3^{n-2}\) term grows the fastest among the terms. Notice that \(3^{n-2}\) and \(2^{n+1}\) have hidden constant factors, i.e., \(3^{n-2} = 3^n \cdot 3^{-2}\) and \(2^{n+1} = 2^n \cdot 2\).

Therefore, \(2 \cdot 3^{n-2} + 6 \cdot 2^{n+1} + 12n^4 = O(3^n)\).

(Pro-tip: Unlike logarithms, the base of exponentials does matter!)

Classifying algorithms

Algorithms can be classified according to their asymptotic running time. We can establish a sort of hierarchy where we arrange these functions in terms of growth rate. Table [tbl:timecmp] shows the common time complexities, from slowest-growing to fastest-growing. Algorithms that rely on brute force (e.g., those involving enumerating subsets and permutations) often run in exponential or factorial time.

| notation | name | examples |

|---|---|---|

| \(O(1)\) | constant | adding two integers |

| \(O(\log n)\) | logarithmic | binary search |

| \(O(n^c)\) where \(0<c<1\) | fractional power | primality testing by trial division |

| \(O(n)\) | linear | iterating through a list |

| \(O(n \log n)\) | linearithmic | merge sort, quicksort |

| \(O(n^2)\) | quadratic | checking all possible pairs |

| \(O(n^3)\) | cubic | naïve matrix multiplication |

| \(O(n^c)\) where \(c > 1\) | polynomial | nested for-loops (in most cases) |

| \(O(c^n)\) where \(c > 1\) | exponential | enumerating subsets |

| \(O(n!)\) | factorial | enumerating permutations |

In summary, the rule of thumb is: logarithms grow more slowly than polynomials and polynomials grow more slowly than exponentials.

Polynomial vs. pseudo-polynomial time

If an algorithm’s running time grows polynomially with the input size (i.e., its running time is a polynomial function with respect to the input size), then we say that the algorithm runs in polynomial time. Most of the time (pun not intended), we analyze the running time of algorithms with respect to the size of the input, such as the length of the array or string, and the number of bits or digits needed to represent an integer.

However, there are algorithms (specifically, numeric algorithms) whose running times grow polynomially with the numeric value of the input, but not necessarily with the size. We call such algorithms as pseudo-polynomial. The simple reason is that the numeric value of the input is exponential in the input size. For example, the largest value for an \(n\)-bit (unsigned) integer is \(2^n - 1\).

The following section shows a more concrete example.

Segue: fast modular exponentiation

A naïve way to do modular exponentiation, i.e., to compute \(a^p \bmod N\) where \(p \ge 0\) is an integer, is to multiply \(a\) by itself \(p\) times while reducing the result modulo \(N\). In pseudocode:

NaïveModExp(\(a\), \(p\), \(N\)):

1: \(x \gets 1\)

2: for \(i \gets 1 \ \textbf{to}\ p\)

3: \(x \gets (a\cdot x) \bmod N\)

4: return \(x\)

We can see that NaïveModExp runs in \(O(p)\) time, or more concretely, it takes \(p-1\) multiplications to raise an integer to \(p\). To put it another way, since \(p\) is \(m = \left\lfloor \log_2 p \right\rfloor + 1\) bits long, we can say that NaïveModExp runs in \(O(2^m)\) time. Since the running time of NaïveModExp is actually exponential in the number of bits (the input size) even if it is polynomial in the value of the input \(p\), it is a pseudo-polynomial time algorithm.

However, this approach becomes problematic when \(p\) gets very large, since RSA in practice involves raising integers to very large exponents. A better approach is to consider exponentiation as a series of repeated squarings. If we use the fact that $$a^p = a^{p/2} \cdot a^{p/2} = \left(a^{p/2}\right)^2 = \left(a^2\right)^{p/2},$$ then we can compute, for example, \(a^8\) in just \(3\) multiplications. If the exponent \(p\) is odd, we can use the property $$a^p = a \cdot a^{p-1} = a \cdot \left(a^2\right)^{(p-1)/2}.$$ Thus we have:

FastModExp(\(a\), \(p\), \(N\)):

1: if \(p \mathrel{=}0\)

2: return \(1\)

3: else if \(p\) is even

4: return FastModExp(\(a^2\), \(p/2\), \(N\))

5: else

6: \(x \gets\) FastModExp(\(a^2\), \((p-1)/2\), \(N\))

7: return \((a \cdot x) \bmod N\)

Now, FastModExp runs in \(O(\log p)\) time since the exponent gets progressively halved until it reaches \(1\). Alternatively, FastModExp runs in \(O(m)\) time where \(m\) is the number of bits of \(p\). Unlike our naïve pseudo-polynomial time algorithm, the running time of FastModExp is actually polynomial in the number of bits, hence FastModExp can be considered as a polynomial-time algorithm.

The classes P and NP

\(\P\) (“polynomial time”) is the class of problems that are solvable in polynomial time, while \(\NP\) (“nondeterministic polynomial time”) is the class of problems whose solutions can be verified in polynomial time.

A nice property of \(\P\) is that it is closed under composition, which means if an algorithm makes a polynomial amount of calls to another polynomial-time algorithm, then the entire algorithm still takes polynomial time.

The main question: P vs. NP

Time to address the elephant in the room. Suppose a solution to some problem can be checked in polynomial time. Does that imply we can also solve that same problem in polynomial time? We don’t know yet.

We know for sure that there are more problems for which solutions can be verified efficiently than problems that can be solved efficiently. However it may be entirely possible that \(\P = \NP\) because we’re unable to prove that there exists some problem in that’s not in \(\P\).

As of now, the common consensus points to \(\P \ne \NP\), mainly because efforts have been underway for the past 60 or so years to find efficient solutions to problems in \(\NP\), but without much success. \(\P \ne \NP\) means that the only solution to \(\NP\) problems are brute force algorithms.

Reducibility

If we can “reduce” some problem \(A\) to another problem \(B\), it means that we use an algorithm that solves problem \(B\) as part of an algorithm that solves problem \(A\). Basically we treat the algorithm for problem \(B\) as a “black box.”

We’ll modify this concept a bit to take efficiency into account. If we can efficiently reduce problem \(A\) to problem \(B\), an efficient solution to problem \(B\) can be used to solve \(A\) efficiently.

Problem \(A\) is polynomial-time reducible to problem \(B\), written as \(A \le_p B\) if there exists an algorithm \(f\) that transforms every instance of \(A\) into every instance of \(B\) in polynomial time. We call \(f\) the polynomial-time reduction of \(A\) to \(B\).

The notion of (in)tractability

We want our algorithms to scale well when the input size gets larger, so we rule out those that run in at least exponential time. Thus:

An algorithm is efficient if it runs in polynomial time.

It reinforces the notion that a particular problem may not have an efficient algorithm to solve it. If an efficient algorithm exists that solves a particular problem, then we call that problem tractable. On the other hand, if no efficient algorithm exists that solves a particular problem, then that problem is intractable.

Most intractable problems only have an algorithm that solves them, those that involve brute force, which are infeasible for anything other than really small inputs.

Most of the time, efficient algorithms are feasible enough for practical applications. For example, the way Python multiplies very large integers is by using Karatsuba’s algorithm after a certain threshold of number of digits is reached (i.e., the crossover point \(n_0\)).

However, there are algorithms whose crossover point \(n_0\) is “astronomically” large, meaning the stated efficiency cannot be attained until the input becomes sufficiently large that they are never used in practice. As such they are called galactic algorithms. One example is the fastest-known algorithm to multiply two numbers, whose efficiency can only be achieved if the numbers have at least \(2^{1729^{12}}\) bits (or at least \(10^{10^{38}}\) digits) long, which is much greater than than the number of atoms in the known universe!

In complexity theory, intractable problems belong to the class \(NP\).

Intractable problems in number theory

Modern cryptography exploits the fact that some problems in number theory have no efficient solutions. Here is a selection of number-theoretic problems of cryptographic relevance, taken from (Menezes, Oorschot, and Vanstone 1996):

-

The integer factorization problem

Given a positive integer \(n\), find its prime factorization.

-

The discrete logarithm problem

Given a prime \(p\), a generator \(g\) of \(\ZZ_p^\ast\), and an element \(y \in \ZZ_p^\ast\), find the integer \(x\) such that \(g^x \equiv y \pmod{p}\).

-

The RSA problem

Given a positive integer \(n = pq\), where \(p\) and \(q\) are distinct odd primes, a positive integer \(e\) such that \(\gcd\left(e, (p-1)(q-1)\right) = 1\), and an integer \(c\), find an integer \(m\) such that \(m^e \equiv c \pmod{n}\).

-

The Diffie–Hellman problem

Given a prime \(p\), a generator \(g\) of \(\ZZ_p^\ast\), and elements \(g^a \bmod p\) and \(g^b \bmod p\), find \(g^{ab} \bmod p\).

It is not yet known if these problems are NP-complete, meaning all problems in can be reduced to these problems that are also in . For instance, if we have discovered an efficient algorithm to factor integers, then it doesn’t necessarily imply that \(\P \ne \NP\) since there’s no way yet to reduce any problem in into the integer factorization problem. However, if anyone has come up an efficient algorithm to solve an NP-complete problem, then we can use that algorithm to construct an efficient algorithm to factor integers.

Randomized algorithms

From the name itself, a randomized algorithm (also called probabilistic algorithm), incorporates randomness during its execution. Think of it like an algorithm that rolls a dice or flips a coin to make decisions on how it would proceed. As such, for each fixed input, a randomized algorithm may perform differently from run to run. The variation comes from the deliberate random choices made during the execution of the algorithm; but not from a distribution assumption of the input. On the other hand, we have deterministic algorithms that don’t use randomness at all. There are hundreds of computational problems for which a randomized algorithm leads to a more efficient solution, and sometimes to the only efficient solution.

Two flavors of randomized algorithms

Most randomized algorithms fall into two classes:

-

Las Vegas algorithms

These are guaranteed to return the correct output, but likely to run in polynomial time. In other words, these could run for a very long time (i.e., the running time is unbounded).

An example of a Las Vegas algorithm is randomized quicksort.

-

Monte Carlo algorithms

These are guaranteed to run in polynomial time, but likely to return the correct output (i.e., there’s a chance that the output is wrong).

An example of a Monte Carlo algorithm is the Miller–Rabin primality test.

There’s a way to convert a Las Vegas algorithm into Monte Carlo; all we need to do is to run it a fixed number of iterations and if the algorithm finds an answer it outputs the answer, or a \(\bot\) denoting failure if otherwise. The converse is not necessarily true, as there’s no known general way to convert Monte Carlo algorithms into Las Vegas.

The class of problems solvable by a Las Vegas algorithm is called \(\ZPP\) (“zero-error probabilistic polynomial time”), while problems solvable by a Monte Carlo algorithm belong to the classes called \(\RP\) (“randomized polynomial time”) and \(\BPP\) (“bounded-error probabilistic polynomial time”).

Running time of randomized algorithms

How do we measure the running time of a randomized algorithm? Consider the following two scenarios that illustrate the two possible approaches to measuring running time:

-

You publish your algorithm and a bad guy picks the input, then you run your randomized algorithm.

-

You publish your algorithm and a bad guy picks the input, then the bad guy chooses the randomness (or “rigs the dice” so to speak) in your randomized algorithm.

In the first scenario, the running time is a random variable as it depends on the randomness that your algorithm employs, so here it makes sense to reason about the expected running time. In the second scenario, the running time is not random since we know how the bad guy will choose the randomness to make our algorithm suffer the most, so we can reason about the worst-case running time.

It’s important to keep in mind that, even with randomized algorithms, we are still considering the worst-case input, regardless of whether we’re computing expected or worst-case running time, since in both scenarios we assume that a bad guy picks the input.

The expected running time is not the running time when given an expected input! We are taking the expectation over the random choices that our algorithm would make, not an expectation over the distribution of possible inputs.

One-way and trapdoor functions

One-way functions are functions that are “easy” to compute but “hard” to invert, which makes them an extremely important cryptographic primitive. While there isn’t a definite proof yet of the existence of one-way functions because proving it involves resolving the \(\P\) vs. \(\NP\) problem, we just assume for the meantime that one-way functions exist.

One-way functions are like powdered three-in-1 instant coffee mix. It’s easy enough to mix their individual constituents (coffee, creamer, sugar) into a powdered mixture, but if you start off with the powdered mixture, it would be very difficult (but not impossible) to “reverse” the mixing process by separating each grain or particle of coffee/creamer/sugar.

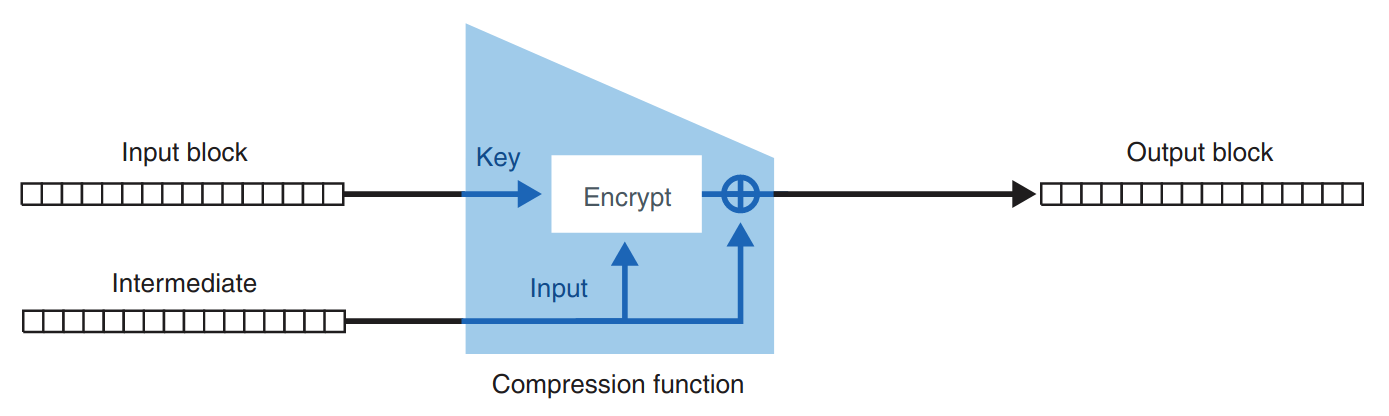

In particular, one-way functions are used to construct hash functions, those that take in some input and returns an output of a fixed size. For example, a hash function \(H\) may map the input string as $$H(\s{hash browns}) = \s{c97b3f584d47619185635bb3a6ac9ce2}.$$ To be considered “one-way” nobody should be able to recover the string just from the output , so its “inverse function” should be infeasible to implement. For this reason, one-way functions are used for symmetric-key primitives, and cryptographic mechanisms dealing with integrity and authentication.

Trapdoor functions

If we allow one-way functions to be efficiently inverted with some special knowledge, but still maintaining the “easy” to compute but “hard” to invert property, then we get another family of functions called trapdoor functions. They are called like that since it’s easy to fall through a trapdoor, but it’s very hard to climb back out and get to where you started unless you have a ladder.

For example, the RSA cryptosystem uses the trapdoor function $$f(x) = x^e \bmod n.$$ If we can find the factorization of \(n\), we can compute \(\phi(n)\), so the inverse of \(e\) can be computed \(d = e^{-1} \bmod {\phi(n)}\).

References

- Menezes, Alfred J., Paul C. van Oorschot, and Scott A. Vanstone. 1996. Handbook of Applied Cryptography. CRC Press.

Computational security

Relevant readings: Katz and Lindell 3.1–3.4

Defining the impossible

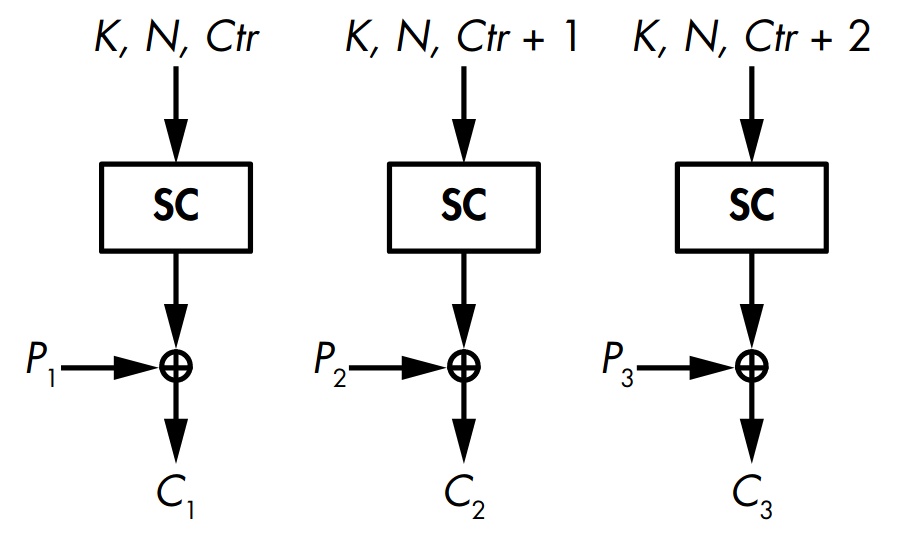

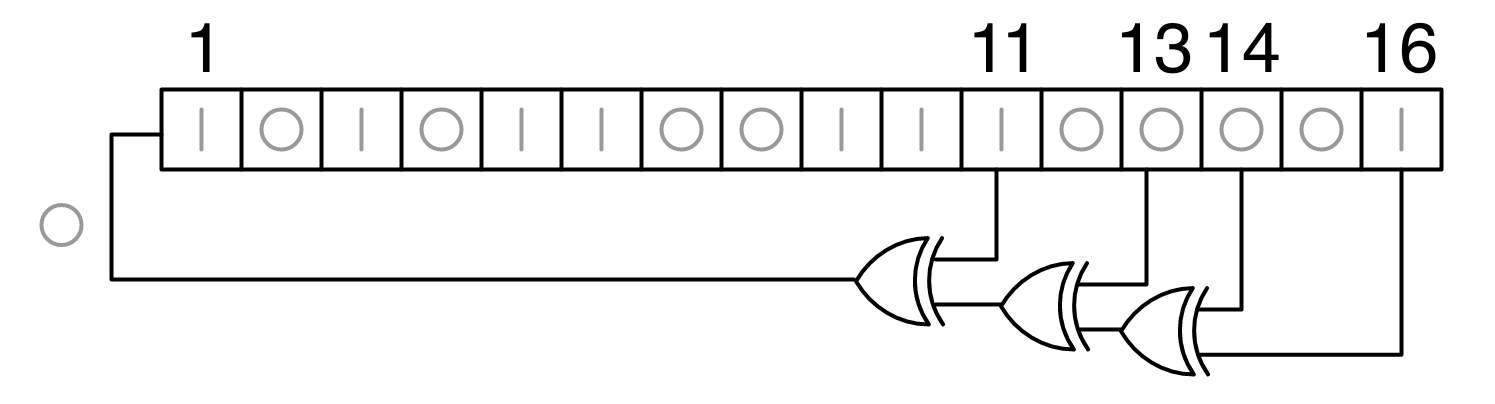

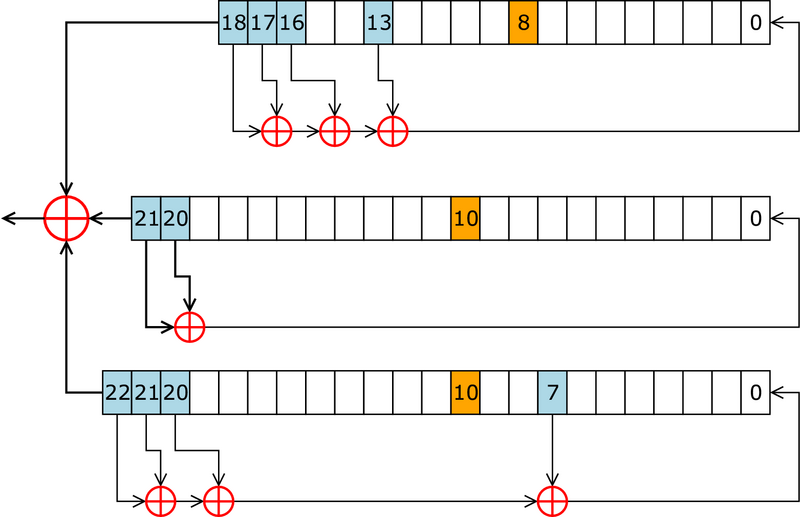

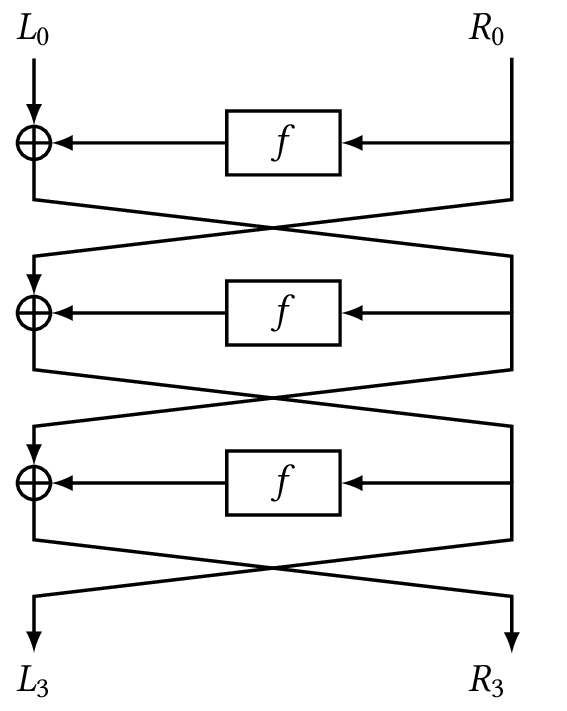

Back in Lecture 2, we introduced the notion of perfect secrecy. Perfect secrecy is what we also call information-theoretic security, where absolutely no information about the plaintext is leaked even if the attacker has unlimited computational power. But for practical purposes, perfect secrecy is a bit “too strong” because it would consider insecure an encryption scheme for which a successful attack would take trillions of years. The average person would not be able to witness such an attack in their lifetime for them to consider the scheme as insecure.